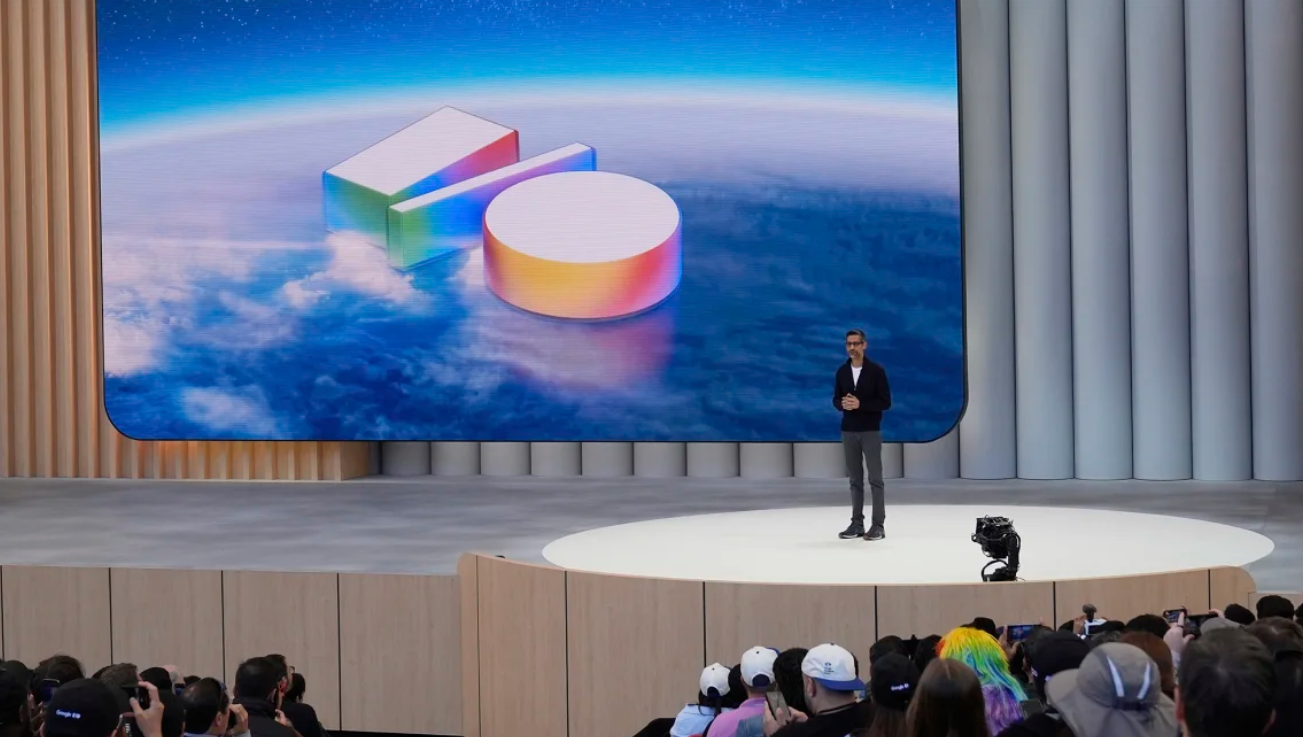

Google is redefining how people interact with its search engine, moving beyond traditional keyword searches toward intelligent, personalized digital assistants. The tech giant announced major changes at its annual developer conference, signaling a shift in how users will find and use information online.

Instead of typing keywords into a search bar and skimming through links, the new Google experience will rely more on AI tools capable of understanding context, preferences, and even a person’s real-world surroundings. These tools aim to act more like digital agents than passive search engines.

This transformation comes at a time when competitors like ChatGPT, Perplexity, and other AI-powered services are reshaping how people search for information. Google’s latest updates represent a strong response to that growing competition.

Enter AI Mode

One of the most notable announcements is the broader release of “AI Mode” within the Google app, previously limited to early testers. Now available to all U.S. users, AI Mode is designed to offer smarter, more context-aware responses.

Unlike standard searches, AI Mode breaks down queries into related subtopics and performs additional background searches to refine the answers. Soon, it will also draw on people’s search histories and data from other Google services, such as Gmail, to make responses more personalized.

Two experimental features within AI Mode will remain under testing via Google’s Labs program: a task-handling function and real-time visual search. The first, powered by Google’s Project Mariner, will handle multi-step tasks such as finding and booking affordable event tickets or restaurant reservations, much as a virtual assistant would.

The second experimental feature enhances visual search. While Google Lens already allows users to search by snapping photos, this new tool uses the phone’s camera to interact in real time. For example, users can show Google a bolt and ask whether it fits a specific bike frame, which is particularly helpful in situations that are hard to describe in words.

Merging AI Search and Gemini Assistant

Some of these features also overlap with Google’s Gemini AI assistant, creating a bit of confusion between the platforms. According to Google executives, search remains focused on learning and information discovery, while Gemini is aimed more at productivity tasks like generating code or writing documents.

Still, Google is expanding Gemini’s visual capabilities to iPhone users, signaling that visual and conversational search are both central to its future strategy.

Navigating Growing Competition

Google’s dominance in search is under more pressure than ever. Rivals like OpenAI, Apple, Microsoft, and Amazon are heavily investing in their own AI tools, pushing users toward new platforms and away from traditional search engines.

OpenAI has already launched its own AI-powered search product. And recently, Apple revealed that Google searches in its Safari browser declined for the first time since 2002, an unusual milestone that hints at changing user behavior. Although Google disputes that claim and insists search queries are still growing overall, market analysts predict that traditional search engine traffic may drop by 25% by 2026 due to AI alternatives.

Despite these shifts, Google CEO Sundar Pichai remains optimistic. “We’re entering a new phase of AI where decades of research are becoming everyday reality,” he said during a media briefing.

He envisions a future shaped by proactive digital agents, making online interactions faster, smarter, and more intuitive. “All of this,” Pichai added, “will keep getting better.”