A coalition of major philanthropic funders, including the Gates Foundation and the Ballmer Group, has announced a bold $1 billion investment over the next 15 years to develop artificial intelligence (AI) tools that will support frontline professionals such as public defenders, social workers, parole officers, and others who serve Americans facing difficult economic and social challenges.

The initiative will be carried out through a newly established entity called NextLadder Ventures, which will provide both grants and investments to nonprofit and for-profit organizations working on AI-driven solutions. The aim is to equip these overburdened professionals—often managing massive caseloads with limited resources—with better tools to improve outcomes for the people they serve.

Brian Hooks, CEO of Stand Together—a nonprofit founded by billionaire Charles Koch—emphasized that the goal is not only to use cutting-edge technologies but to ensure those solutions are developed with the people who are living through economic hardship. “The hundreds of entrepreneurs we’ll support are not building for people—they’re building with them,” Hooks said.

Other supporters of the initiative include hedge fund manager John Overdeck and the Valhalla Foundation, launched by Intuit co-founder Scott Cook and Signe Ostby. The Ballmer Group is the philanthropic venture of former Microsoft CEO Steve Ballmer and his wife, Connie. The financial contribution of each organization was not disclosed.

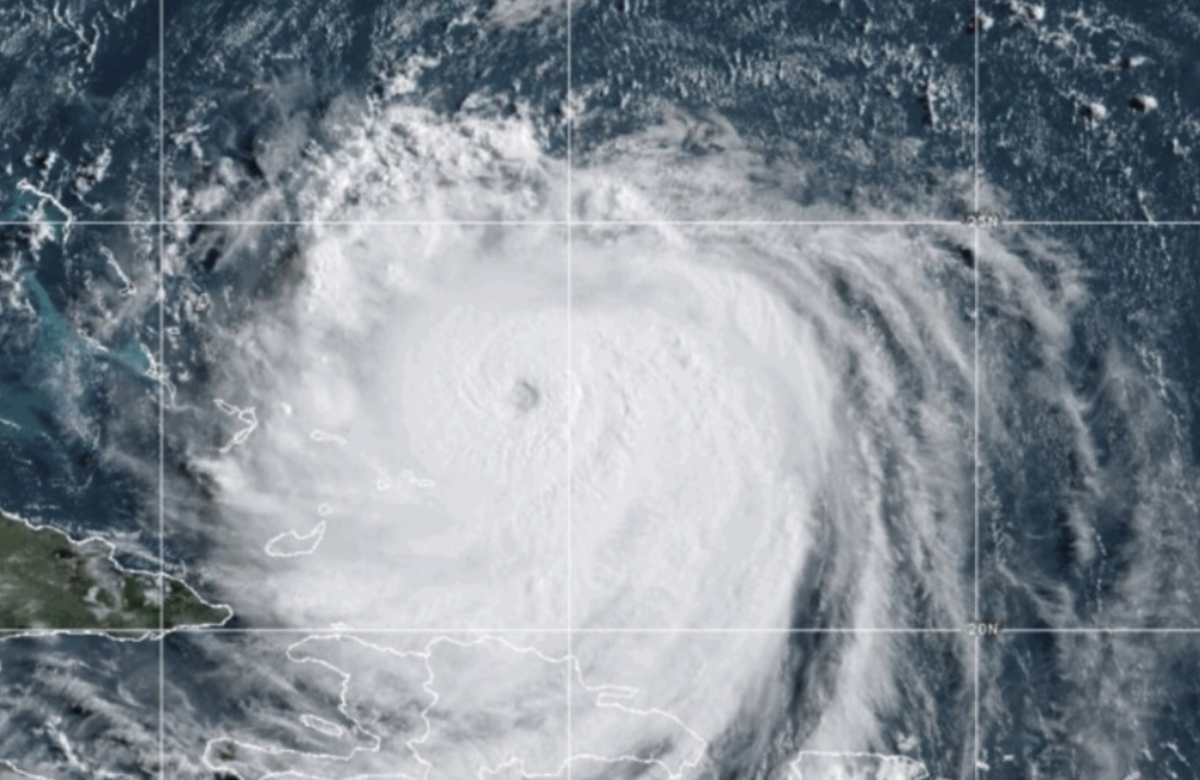

The overarching mission behind this investment is to promote economic mobility. Funders believe AI can play a critical role in making processes more efficient and accessible—such as helping match individuals with assistance after a disaster or enabling parole officers to process paperwork faster for those who’ve met eligibility requirements but are stuck in bureaucratic limbo.

Kevin Bromer, who leads technology and data strategy at the Ballmer Group, said the collaboration emerged from a shared recognition of gaps in the sector. He will serve on the board of NextLadder, which will also include three independent board members along with representatives from partner organizations.

Ryan Rippel, who previously led the economic mobility portfolio at the Gates Foundation, will head NextLadder. The organizational structure—whether nonprofit or for-profit—is yet to be finalized. However, any profits from investments will be reinvested into future initiatives.

Jim Fruchterman, a tech-for-good expert and founder of Tech Matters, praised the initiative’s focus on empowering frontline workers rather than replacing them. He noted that working closely with these professionals to identify the most frustrating and inefficient parts of their jobs is likely to produce meaningful impact. “If we’re helping frontline workers do their job better, instead of using AI on poor people, then it’s a step in the right direction,” he said.

NextLadder is partnering with AI company Anthropic, which will provide technical expertise and access to its tools. Elizabeth Kelly, head of beneficial deployments at Anthropic, stated that the company has committed $1.5 million annually to support the initiative and will provide the same level of service to grantees as it does to its major corporate clients. “We want to guide and support nonprofits using Claude with real care,” she said, referring to Anthropic’s large language model.

According to Hooks, philanthropy plays a crucial role in de-risking innovation and giving new ideas time to evolve. “If we do this right, our capital will prove what’s possible,” he said.

However, the rise of AI in sensitive human-service sectors brings new ethical concerns. Suzy Madigan, Responsible AI Lead at Care International UK, cautioned that AI deployment in humanitarian or social work contexts can unintentionally deepen inequalities if not handled properly. “The solution is to involve affected communities at every step,” she said. “AI must enhance human work—not replace it.”

Research from groups like the Active Learning Network for Accountability and Performance (ALNAP) has highlighted the need for rigorous testing and governance when deploying AI in high-risk, high-impact situations. Experts recommend carefully evaluating whether AI is the right tool, ensuring it performs accurately, protects user privacy, and avoids locking organizations into dependency on a single provider.

Similarly, the National Institute of Standards and Technology (NIST) stresses the importance of trustworthy AI—tools that are explainable, accountable, and reliable in their decision-making processes.

Hooks concluded by stressing that people facing economic hardship must not be left out of technological advances. “Denying these communities access to the benefits of next-gen solutions is simply unacceptable,” he said.
